Improving High-Frequency Trading: Using Artificial Intelligence, Machine Learning and Software Defined Radios to Break Down Technological Barriers

By Tamara Moskaliuk, Marketing Director, Per Vices

High-frequency trading (HFT) has turned into an arms race to acquire data and act on it faster than anyone else. With multi-million-dollar advantages hinging on nanosecond differences, trading firms benefit from reducing both link latency and application/algorithm processing latency.

By taking emotion-driven human decision making out of the equation through algorithmic trading, firms can profit by exploiting market conditions that are indiscernible to humans, within time windows too short for a person to act on. With trades happening at breakneck speeds, supporters say HFT creates high liquidity and eases market fragmentation. Opponents counter that this “super-human” advantage edges out competition, and that it brings a high risk of exploitation and fraudulent activities such as spoofing, as well as a high cost of entry due to algorithm development, hardware/network infrastructure and subscription fees for data feeds. Even amid controversy, high-frequency trading is here to stay and continues to push the limits of what is technologically possible through the integration of new systems, programs, hardware and software.

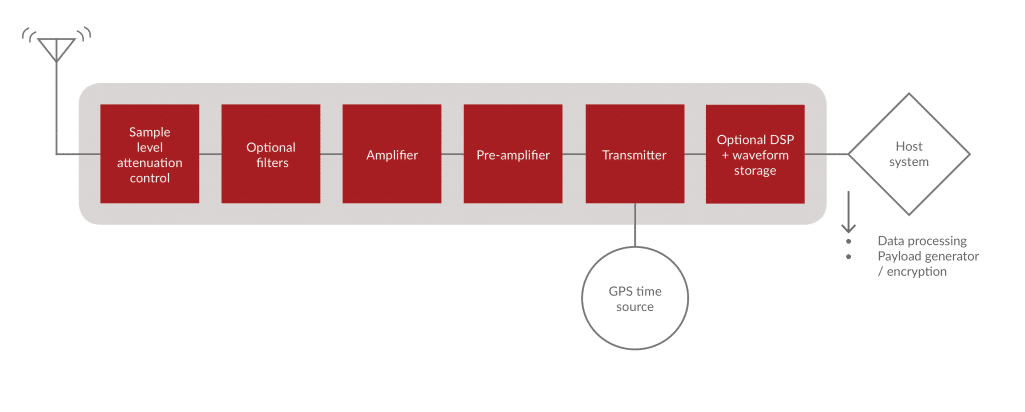

With so much hinging on low latency, one of the latest technologies being leveraged to improve HFT is software defined radio (SDR): an advanced, flexible, multi-channel transmit and receive solution that can integrate into existing systems and can process data at the speed and accuracy needed for high-frequency trading. An SDR consists of a radio front-end and a digital back-end with an FPGA (field programmable gate array), a reprogrammable chip that allows ultra-low-latency processing of complex algorithms. The FPGA’s parallel architecture makes it well suited to reducing round-trip latency in receiving exchange data and executing trade orders. The flexible, low group delay architecture enables SDRs to offer the lowest latency using HF while combining the radio resources for point-to-point links with the on-board processing capabilities of the FPGA. Figure 1 shows the structure of an SDR. Top-of-the-line SDRs offer up to 16 channels, include very low latency radio chains, and can be customized for trade-offs between latency and reliability per user requirements.

[Figure 1: The composition of an SDR allows for low latency.]
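To give a feel for why link latency matters, here is a rough back-of-the-envelope sketch in Python comparing one-way propagation delay over a radio path and over optical fiber. The 1,000 km distance and the velocity factors are illustrative assumptions, not figures from any particular deployment, and the sketch ignores path geometry, equipment delay and processing time.

```python
# Illustrative one-way propagation delay over a long-haul link.
C_VACUUM_M_PER_S = 299_792_458  # speed of light in vacuum (exact by definition)


def one_way_delay_ms(distance_km: float, velocity_factor: float) -> float:
    """Delay in milliseconds for a signal moving at velocity_factor * c."""
    speed_m_per_s = C_VACUUM_M_PER_S * velocity_factor
    seconds = distance_km * 1_000 / speed_m_per_s
    return seconds * 1_000


# Assumptions (hypothetical, for illustration only):
#   - a 1,000 km path
#   - radio through air at roughly 1.0 c
#   - optical fiber at roughly 0.68 c (refractive index near 1.47)
PATH_KM = 1_000
radio_ms = one_way_delay_ms(PATH_KM, 1.0)
fiber_ms = one_way_delay_ms(PATH_KM, 0.68)

print(f"radio: {radio_ms:.3f} ms, fiber: {fiber_ms:.3f} ms, "
      f"difference: {fiber_ms - radio_ms:.3f} ms")
```

Under these assumptions the radio path comes in at roughly 3.3 ms against roughly 4.9 ms for fiber, a gap that is enormous when trades are decided on nanosecond differences.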

To complement the processing power of SDRs, machine learning (ML) and artificial intelligence (AI) have been integrated into HFT algorithms and programs. Support vector machines (SVMs), which analyze data for classification and regression, draw on statistical data fitting, optimization and decision theory. Algorithms can be retrained, including with unsupervised ML, to learn and spot new patterns and trends and to implement the best trading strategies automatically. Natural language processing can be used to gauge sentiment by working through market publications, financial journals, opinion pieces and reports. ML is a powerful tool for picking up subtle trends and nuances in a sea of information and for analyzing and acting on the data quickly and efficiently.
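As a rough illustration of the SVM idea, the following Python sketch trains a tiny linear classifier with subgradient descent on the hinge loss. This training method is a simplification chosen to keep the example dependency-free, not how a production library solves the problem. The two features (imagine an order-book imbalance and a short-term return) and all of the data points are hypothetical.

```python
# Toy linear SVM trained by subgradient descent on the hinge loss.
# Features, labels and hyperparameters are made up for illustration only.


def train_linear_svm(samples, epochs=50, lr=0.1, lam=0.01):
    """Return (weights, bias) for samples of (features, label in {-1, +1})."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in samples:
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # L2 regularisation shrinks the weights on every step.
            w = [wi * (1 - lr * lam) for wi in w]
            if margin < 1:
                # Point is inside the margin or misclassified: push it out.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def predict(w, b, x):
    """Classify x as +1 (e.g. price up) or -1 (e.g. price down)."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Hypothetical training set: (imbalance, short-term return) -> direction.
TRAINING = [
    ((0.8, 0.5), 1), ((0.6, 0.9), 1), ((0.9, 0.7), 1),
    ((-0.7, -0.4), -1), ((-0.5, -0.8), -1), ((-0.9, -0.6), -1),
]

weights, bias = train_linear_svm(TRAINING)
print(predict(weights, bias, (0.7, 0.6)))
print(predict(weights, bias, (-0.6, -0.7)))
```

Retraining in this picture simply means calling `train_linear_svm` again on a newer batch of samples.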

Nonetheless, there are trade-offs between hardware-driven and software-driven designs. To acquire HFT market data in the first place, one needs very efficient networking hardware. HFT network architecture implies ultra-fast network communications, high-performance switches and routers, specialized servers, and operating system optimizations such as kernel bypass networking. Sourcing, maintaining and updating this hardware can carry significant costs, which may be a barrier for firms looking to enter the market. FPGAs, by contrast, are flexible: they can be reprogrammed for tasks such as backtesting (running new trading algorithms against historical market data to determine whether they would have made the firm more profitable) and for adjusting the trade-off between latency and reliability for trades. They do not have a fixed architecture that relies on operating system overhead, interfaces, interrupts and the generally sequential execution found in typical CPUs.

On the software side, Big O notation is used to describe how an algorithm's running time grows as its input size grows towards infinity, which helps in understanding performance and choosing the right algorithm (a sorting algorithm, for example) for a given data set and hardware. It is not enough here, because Big O ignores constant factors: every operation or function within an algorithm adds to the run time. So even if an algorithm is more predictive because it applies several analysis strategies to market data, it can underperform one with fewer analysis strategies because it runs more slowly. Predicting opportunities means nothing if you can’t execute a trade fast.
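The point about Big O can be made concrete with a small Python sketch that counts operations instead of measuring time. Both hypothetical signals below grow linearly with the window size `n`, so they share the same Big O class, yet the one that combines three analyses does several times the work per tick. The indicator choices, the majority vote and the price series are all invented for illustration.

```python
# Two signals with the same O(n) growth but different per-tick work.


def moving_average(prices, window, ops):
    """Mean of the last `window` prices; ops[0] counts the additions made."""
    total = 0.0
    for price in prices[-window:]:
        total += price
        ops[0] += 1
    return total / window


def simple_signal(prices, n, ops):
    """One indicator: is the last price above its n-tick average?"""
    return prices[-1] > moving_average(prices, n, ops)


def richer_signal(prices, n, ops):
    """Majority vote over three averages (n, 2n and 4n ticks)."""
    votes = sum(
        prices[-1] > moving_average(prices, w, ops)
        for w in (n, 2 * n, 4 * n)
    )
    return votes >= 2


# Made-up price series, long enough for the largest window (4 * N ticks).
PRICES = [100 + (i % 7) * 0.5 for i in range(64)]
N = 8

simple_ops, richer_ops = [0], [0]
simple_signal(PRICES, N, simple_ops)
richer_signal(PRICES, N, richer_ops)
print(simple_ops[0], richer_ops[0])
```

With `N = 8` the simple signal performs 8 additions and the richer one 56, a sevenfold difference that Big O notation hides because both are linear in `n`.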

Optimized software and hardware that do not limit each other are the way to overcome the inefficiencies and bottlenecks that would derail successful trade execution, and to mitigate errors. A combination of software defined radios, machine learning and artificial intelligence is the way to gain an advantage in trading and to propel technology and the financial markets further into the future.

Tamara Moskaliuk holds dual degrees in Finance and Economics from McMaster University and worked in banking and venture capital before moving her career to tech startups. She is currently the Marketing Director at Per Vices, a leading manufacturer of high-performance, low-latency software defined radios.